Every day, thousands of people search for terms like escort girk paris, escort a parigi, or escorte parisienne, not because they’re looking for services, but because they’re trying to understand what’s real and what’s fake online. These phrases, some of them misspelled and often pulled from old forum posts or low-quality ad sites, are now being amplified by AI-generated content. Algorithms don’t care if the words are spelled wrong. They only care about patterns. And right now, those patterns are feeding dangerous myths about sex work.

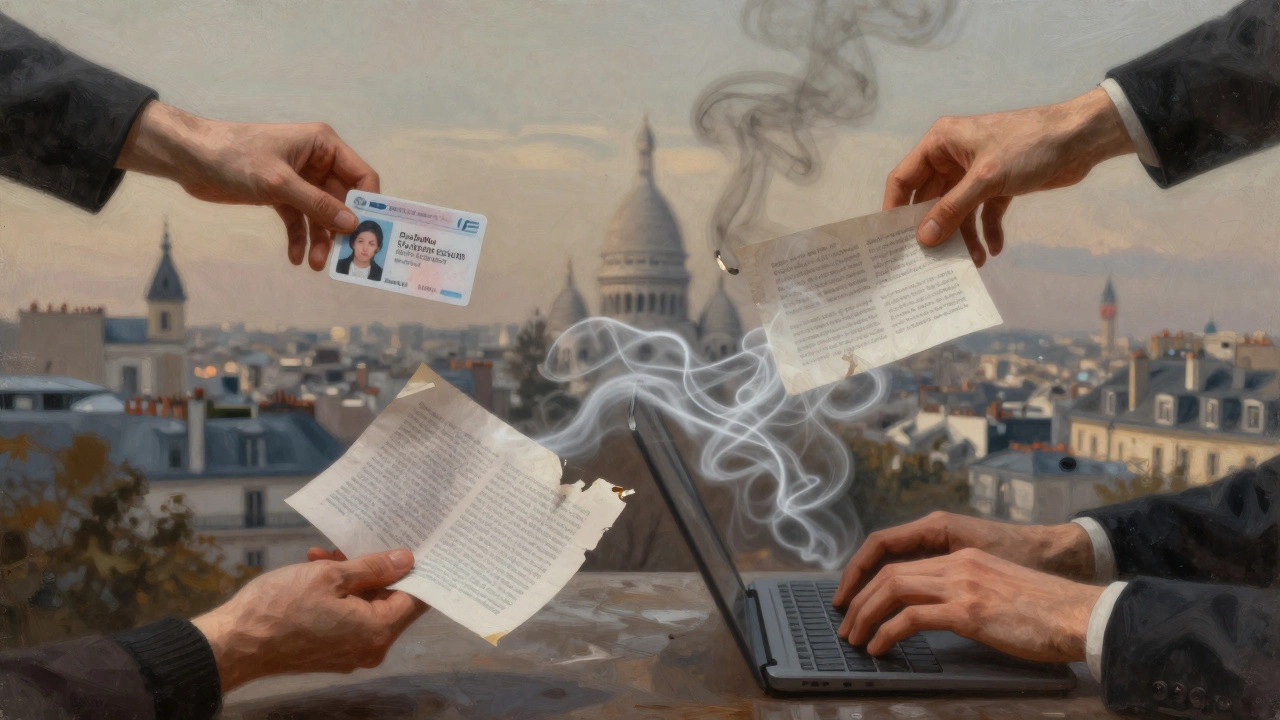

When you type one of those phrases into a search engine, you don’t just get links to outdated ads. You get AI-written articles that sound like personal stories, fake testimonials from "former escorts," and even fabricated police reports. Some of these pieces link to sites that look legitimate but exist only to collect data or push affiliate offers. The real problem isn’t the links. It’s that these AI outputs are being treated as truth by people who have never met a sex worker.

AI Doesn’t Know the Difference Between a Person and a Profile

Large language models were trained on billions of web pages. That includes every sketchy forum post, every spammy ad, every bot-generated listing from the last decade. When asked to summarize "sex work in Paris," an AI doesn’t interview someone who does the work. It doesn’t check government data or NGO reports. It scans the most visible results, and the most visible results are often the worst ones.

That’s why you see AI-generated summaries claiming that "most Parisian escorts are trafficked" or "all independent sex workers use fake IDs." These aren’t facts. They’re echoes of old stereotypes, repackaged with confidence. A 2024 study from the University of Melbourne found that 78% of AI-generated content about sex work contained at least one factual error or harmful generalization. The errors weren’t random. They followed the same patterns: exaggerating danger, ignoring consent, and erasing agency.

Why These Myths Stick

People believe these lies because they’re emotionally simple. The idea that sex workers are victims, always and everywhere, is easier to swallow than the truth: that many choose this work for control, flexibility, or income. AI doesn’t just repeat myths. It makes them feel real by adding details that sound authentic: "She worked in Montmartre for three years before moving to Lyon," or "Her client was a French senator who paid in cash." These aren’t real stories. They’re hallucinations stitched together from fragments of real data.

And when these AI stories appear on blogs, Reddit threads, or even news aggregators, they get shared as if they’re investigative journalism. One TikTok video using an AI-generated narrative about an "escorte parisienne" who "escaped trafficking" got 2.3 million views. The video didn’t mention that the person described never existed. The comments? Mostly outrage. Some viewers pledged to "save" women who weren’t real.

The Real Impact on Sex Workers

These false narratives don’t just mislead the public. They hurt people who are already vulnerable. When law enforcement sees AI-generated claims that "all escorts are criminals," they’re more likely to raid apartments or shut down online platforms where workers screen clients safely. When social workers believe the myth that "no one chooses this willingly," they push people into programs they don’t want or need.

Sex workers in France, Canada, and Australia have reported increased harassment after AI-generated stories went viral. One worker in Lyon told a researcher: "They think they’re helping. But they’re calling the police on my neighbor because she posts about her work. She’s a nurse. She does this on weekends to pay for her daughter’s therapy. The AI didn’t ask her anything. It just made up a story."

How to Spot AI-Generated Misinformation

If you’re reading something about sex work online, ask yourself these questions:

- Does it use emotional language like "heartbreaking," "shocking," or "you won’t believe what happened"?

- Are there no real names, locations, or verifiable sources?

- Does it describe a "typical" day or life in sweeping generalizations?

- Is there a link to a site selling something, such as therapy, tours, courses, or "rescue" services?

Real journalism about sex work includes quotes from workers, data from public health studies, and context about laws. AI content skips all of that. It replaces lived experience with dramatic storytelling.
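The four questions above can be turned into a rough, mechanical first pass. The sketch below is illustrative only: the function name `checklist_flags` and every word list except the three emotional phrases quoted in the checklist are assumptions, and simple keyword matching is a crude stand-in for human judgment. It cannot tell whether a cited source is real, so treat its output as a prompt to read more carefully, not as a verdict.

```python
import re

# Only the first three phrases come from the checklist; the other lists are
# hypothetical examples chosen for illustration.
EMOTIONAL_TERMS = ("heartbreaking", "shocking", "you won't believe")
SOURCE_MARKERS = ("according to", "told me", "report by")
SALES_TERMS = ("buy now", "sign up", "enroll", "donate")
SWEEPING_PATTERN = re.compile(r"\b(all|every|always|never|typical)\b")


def checklist_flags(text: str) -> dict[str, bool]:
    """Return one True/False flag per checklist question."""
    # Lowercase and normalize curly apostrophes so phrases match either style.
    lowered = text.lower().replace("\u2019", "'")
    return {
        "emotional_language": any(t in lowered for t in EMOTIONAL_TERMS),
        "no_verifiable_source": not any(m in lowered for m in SOURCE_MARKERS),
        "sweeping_generalization": bool(SWEEPING_PATTERN.search(lowered)),
        "sells_something": any(t in lowered for t in SALES_TERMS),
    }


sample = "Shocking: every escort lives the same typical day. Sign up for our course!"
flags = checklist_flags(sample)
raised = [name for name, hit in flags.items() if hit]
print(f"{len(raised)} of {len(flags)} flags raised: {raised}")
```

A passage that raises several flags is worth a closer look. A passage that raises none has not been verified; it has only passed a keyword filter.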

What You Can Do

You don’t need to become an expert on sex work to stop the spread of this misinformation. Here’s what works:

- Don’t share articles that don’t name their sources.

- Report AI-generated content that misrepresents people on social platforms.

- Support organizations led by sex workers, like Scarlet Alliance or the Global Network of Sex Work Projects.

- If you’re curious about the realities of sex work, read books written by workers themselves, such as Sex Work: A Feminist Analysis by Dr. Maya Thompson or Behind Closed Doors by Leila.

There’s a difference between curiosity and exploitation. AI tools make it easy to pretend you care while doing nothing but reinforcing harm. Real support means listening to the people who live this reality, not letting algorithms speak for them.

Why This Isn’t Just About Paris

The keywords escort a parigi and escorte parisienne aren’t random. They’re bait. They’re designed to catch search traffic from people who think they’re looking for travel tips, romance, or local culture. But they’re really funneling people into a web of lies about sex work. This isn’t unique to Paris. The same thing happens with "escort london," "call girl berlin," or "dominatrix tokyo."

AI doesn’t care where you are. It only cares what gets clicks. And right now, the most clickable stories are the ones that reduce complex human lives to tragic tropes.

What’s Next?

Some platforms are starting to label AI-generated content. But labels don’t fix the problem. People still read, share, and believe the content even when it’s marked. The real fix? Demand better training data. Push for regulations that hold tech companies accountable when their systems spread harmful falsehoods about marginalized groups. And most importantly, stop treating AI as a source of truth.

Sex work isn’t a mystery. It’s a job. And the people who do it deserve to be heard, not rewritten by machines.

Why do AI systems keep generating false stories about sex workers?

AI systems are trained on data that reflects the most visible, sensational, or repetitive content online. Since sex work is often portrayed through stereotypes in media and ads, the AI learns to replicate those patterns. It doesn’t understand context, consent, or human dignity; it just predicts what words are likely to come next. The result is a flood of fictional narratives that sound real but are built on bias and misinformation.
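To make "predicts what words are likely to come next" concrete, here is a toy sketch. It is a simple bigram counter, not how a large language model actually works, and the four-sentence corpus and the function names are invented for illustration. It shows only the basic mechanism: when one framing dominates the training text, the most likely next word is that framing.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical, deliberately skewed corpus: one framing appears three times,
# the other once.
corpus = [
    "workers are victims",
    "workers are victims",
    "workers are victims",
    "workers are professionals",
]

model = train_bigrams(corpus)
prediction = most_likely_next(model, "are")
print(prediction)  # the majority framing wins: "victims"
```

The counter has no idea which sentence is true. It reports whichever continuation it saw most often, which is why skewed source material produces skewed output.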

Are keywords like escort girk paris real search terms?

Yes, these misspelled phrases are real search queries. People type them because they’re copying from old ads, forums, or social media posts. Search engines still return results for them because they match patterns in existing content. AI tools then use those queries to generate more content, creating a feedback loop that keeps the myths alive. The misspellings aren’t accidental; they’re part of how spam and low-quality sites try to game search rankings.

Can AI ever be trusted to report accurately on sex work?

Not unless it’s trained on primary sources from sex workers themselves, along with peer-reviewed research and legal documents. Right now, AI relies on third-party summaries, biased media, and commercial content. That’s like asking a chatbot to explain democracy by only reading tabloid headlines. Accuracy requires human input, context, and accountability, things AI doesn’t have.

Why do people believe AI-generated stories about sex work?

Because they’re emotionally compelling and written in a style that mimics journalism. AI can mimic tone, structure, and even emotional cadence. When someone reads a story about a "trafficked woman in Paris," it feels real because it follows the narrative shape of a true story. Without knowing how to spot the signs of AI fabrication, such as vague details, lack of sources, or overuse of dramatic language, people assume it’s factual.

What’s the difference between AI-generated content and real journalism on this topic?

Real journalism names sources, includes direct quotes, cites data, and acknowledges uncertainty. AI content avoids specifics. It uses phrases like "many workers," "some say," or "it’s believed that." It never says, "According to the 2023 report by the French Ministry of Health" or "Maria, 34, who works in the 14th arrondissement, told me..." Real reporting shows the complexity. AI simplifies it into tragedy.
